So, I finally decided to try to track down the microphone issues. As always, this involved descending into the rabbit hole for a little while. My first goal was to get a (slightly) newer kernel. I was running gentoo-sources-3.10.7 from a genkernel and I wanted to run gentoo-sources-3.11.6 with a manual configuration. Some of my hardware (on my MSI Z77A-GD65 LGA 1155 Intel Z77 motherboard) is listed in my sound wrangling write-up. I also mentioned my irritation at mapping between kernel configuration options (aka, navigating menuconfig), CONFIG_FOO (as listed in .config), and kernel module names (for example, from lspci -k). Using my hardware, I’ll show a somewhat workable way to get from hardware to a menuconfig option.

Spelunking Hardware to Kernel Config Menu Options

# lspci

00:00.0 Host bridge: Intel Corporation Xeon E3-1200 v2/3rd Gen Core processor DRAM Controller (rev 09)

00:01.0 PCI bridge: Intel Corporation Xeon E3-1200 v2/3rd Gen Core processor PCI Express Root Port (rev 09)

00:02.0 VGA compatible controller: Intel Corporation Xeon E3-1200 v2/3rd Gen Core processor Graphics Controller (rev 09)

00:14.0 USB controller: Intel Corporation 7 Series/C210 Series Chipset Family USB xHCI Host Controller (rev 04)

00:16.0 Communication controller: Intel Corporation 7 Series/C210 Series Chipset Family MEI Controller #1 (rev 04)

00:19.0 Ethernet controller: Intel Corporation 82579V Gigabit Network Connection (rev 04)

00:1a.0 USB controller: Intel Corporation 7 Series/C210 Series Chipset Family USB Enhanced Host Controller #2 (rev 04)

00:1b.0 Audio device: Intel Corporation 7 Series/C210 Series Chipset Family High Definition Audio Controller (rev 04)

00:1c.0 PCI bridge: Intel Corporation 7 Series/C210 Series Chipset Family PCI Express Root Port 1 (rev c4)

00:1c.6 PCI bridge: Intel Corporation 7 Series/C210 Series Chipset Family PCI Express Root Port 7 (rev c4)

00:1c.7 PCI bridge: Intel Corporation 7 Series/C210 Series Chipset Family PCI Express Root Port 8 (rev c4)

00:1d.0 USB controller: Intel Corporation 7 Series/C210 Series Chipset Family USB Enhanced Host Controller #1 (rev 04)

00:1f.0 ISA bridge: Intel Corporation Z77 Express Chipset LPC Controller (rev 04)

00:1f.2 SATA controller: Intel Corporation 7 Series/C210 Series Chipset Family 6-port SATA Controller [AHCI mode] (rev 04)

00:1f.3 SMBus: Intel Corporation 7 Series/C210 Series Chipset Family SMBus Controller (rev 04)

01:00.0 VGA compatible controller: NVIDIA Corporation GF114 [GeForce GTX 560 Ti] (rev a1)

01:00.1 Audio device: NVIDIA Corporation GF114 HDMI Audio Controller (rev a1)

03:00.0 IDE interface: ASMedia Technology Inc. ASM1061 SATA IDE Controller (rev 01)

04:00.0 FireWire (IEEE 1394): VIA Technologies, Inc. VT6315 Series Firewire Controller (rev 01)

You can also get the kernel modules used by the hardware with lspci -k. For brevity, I’ll just grab the modules:

# lspci -k | grep use | awk '{ print $5; }' | sort | uniq

ahci

e1000e

ehci-pci

i915

nvidia

pcieport

snd_hda_intel

xhci_hcd
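The grep/awk pipeline above leans on the driver name being the fifth whitespace-separated field. A slightly sturdier sketch, assuming lspci -k labels the line "Kernel driver in use:" (which is what the pipeline above implies), splits on the colon instead. The function name drivers_in_use is made up for illustration:

```shell
# Read `lspci -k` output on stdin and print each driver in use, once.
# Assumes the label text "Kernel driver in use:" followed by the name.
drivers_in_use() {
    awk -F': ' '/Kernel driver in use:/ { print $2 }' | sort -u
}

# Typical use (needs pciutils installed):
#   lspci -k | drivers_in_use
```

Splitting on ": " means the result does not depend on how many words the label has.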

Not so awful. Now, as I was cruising the web looking for some info, I stumbled across the following helpful command line to tie “most” module names back to CONFIG_ lines (I lost it, but I found the URL in my browsing history): grep -R --include=Makefile '\bNAME\.o\b', run from the top of the kernel source tree. For example:

# grep -R --include=Makefile '\be1000e\.o\b'

drivers/net/ethernet/intel/e1000e/Makefile:obj-$(CONFIG_E1000E) += e1000e.o

This one isn’t too surprising. We see that e1000e.o is tied to CONFIG_E1000E. In turn, we can jump into menuconfig (or xconfig) and search for CONFIG_E1000E (in menuconfig, type a forward-slash “/”; in xconfig, go to the find option). In either case, we get a most useful piece of information:

Prompt: Intel(R) PRO/1000 PCI-Express Gigabit Ethernet support

Location:

-> Device Drivers

-> Network device support (NETDEVICES [=y])

-> Ethernet driver support (ETHERNET [=y])

-> Intel devices (NET_VENDOR_INTEL [=y])

Now, the only issue is navigating to that spot and enabling the feature as a module or as a built-in. Not too shabby. Hopefully, I won’t forget in six or twelve months when I think about building a kernel again.
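So that I have a fighting chance of remembering, here is the grep trick wrapped in a small function. This is a sketch, not a polished tool: mod2config is a name I made up, and the default source path /usr/src/linux is an assumption (the usual Gentoo symlink). One reason the trick only works for "most" modules is that lspci reports names with underscores (snd_hda_intel) while the Makefile may spell the object file with hyphens (snd-hda-intel.o), so the sketch accepts either:

```shell
# Print the CONFIG_ symbol(s) whose Makefile line builds MODULE.o.
# Usage: mod2config MODULE [KERNEL_SOURCE_DIR]
mod2config() {
    mod=$1
    src=${2:-/usr/src/linux}   # assumed default; pass your own tree if different
    # Turn every '-' or '_' into the bracket expression [-_] so that
    # snd_hda_intel also matches snd-hda-intel.o in the Makefile.
    pattern=$(printf '%s\n' "$mod" | sed 's/[-_]/[-_]/g')
    grep -R -h --include=Makefile "\b${pattern}\.o\b" "$src" |
        grep -o 'CONFIG_[A-Z0-9_]*' | sort -u
}

# Typical use:
#   mod2config e1000e
#   mod2config snd_hda_intel /usr/src/linux
```

It still relies on GNU grep (for -R, --include and \b), and it will miss modules whose objects are assembled indirectly, so treat an empty result as "go look by hand".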

Two other useful sources of information (note, I plugged in my once-in-a-while USB devices):

# lsusb

Bus 001 Device 002: ID 8087:0024 Intel Corp. Integrated Rate Matching Hub

Bus 002 Device 002: ID 8087:0024 Intel Corp. Integrated Rate Matching Hub

Bus 003 Device 002: ID 046d:08ad Logitech, Inc. QuickCam Communicate STX

Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub

Bus 001 Device 003: ID 046d:c506 Logitech, Inc. MX700 Cordless Mouse Receiver

Bus 002 Device 005: ID 04a9:220e Canon, Inc. CanoScan N1240U/LiDE 30

Bus 002 Device 006: ID 091e:2459 Garmin International GPSmap 62/78 series

# aplay -l

**** List of PLAYBACK Hardware Devices ****

card 0: PCH [HDA Intel PCH], device 0: ALC898 Analog [ALC898 Analog]

Subdevices: 1/1

Subdevice #0: subdevice #0

card 0: PCH [HDA Intel PCH], device 1: ALC898 Digital [ALC898 Digital]

Subdevices: 1/1

Subdevice #0: subdevice #0

The camera uses gspca_zc3xx, which you can track, using the steps above, to this menu option:

Multimedia support -->

[*] Cameras/video grabbers support

[*] Media USB Adapter

--> [M] GSPCA based webcams

--> [M] ZC3XX USB Camera Driver
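After toggling options in menuconfig, it is handy to confirm what actually landed in .config. A minimal sketch, assuming the usual .config layout (CONFIG_FOO=y or =m for enabled options, "# CONFIG_FOO is not set" for disabled ones); config_state is a hypothetical helper name and the default path is an assumption:

```shell
# Print the value of a CONFIG_ symbol from a .config file:
# y, m, or a literal value; prints n when the symbol is unset or absent.
# Usage: config_state SYMBOL [CONFIG_FILE]
config_state() {
    sym=${1#CONFIG_}                       # accept E1000E or CONFIG_E1000E
    cfg=${2:-/usr/src/linux/.config}       # assumed default location
    if line=$(grep -m1 "^CONFIG_${sym}=" "$cfg" 2>/dev/null); then
        printf '%s\n' "${line#*=}"         # everything after the first '='
    else
        printf 'n\n'
    fi
}

# Typical use:
#   config_state CONFIG_E1000E
```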

I started with a kernel seeds 3.11.6 kernel as my base build, then added a few things and removed a few others. I made a diff, which I need to figure out how to conveniently host and attach to this post. <<link to diff>>
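For the curious, this is roughly how such a diff can be produced so that it shows only option changes, regardless of line order. It is a sketch under the same .config layout assumption as before; config_diff is a made-up name and the file names in the usage line are placeholders:

```shell
# Show option lines that differ between two kernel .config files.
# Lines starting with "<" come from OLD, lines starting with ">" from NEW.
# Usage: config_diff OLD_CONFIG NEW_CONFIG
config_diff() {
    a=$(mktemp) && b=$(mktemp) || return 1
    # Keep only option lines (set or "is not set") and sort them in a
    # fixed locale so ordering differences do not show up as changes.
    grep -E '^(CONFIG_|# CONFIG_)' "$1" | LC_ALL=C sort > "$a"
    grep -E '^(CONFIG_|# CONFIG_)' "$2" | LC_ALL=C sort > "$b"
    diff "$a" "$b" | grep '^[<>]'
    rm -f "$a" "$b"
}

# Typical use (placeholder file names):
#   config_diff seed.config /usr/src/linux/.config
```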

NVIDIA Wrangling

So, that got me a running kernel. Next, I realized the reason I hadn’t gone to a 3.11.6 kernel sooner. NVIDIA’s official drivers (which I need for a dedicated GPU computing card) do not support >=3.11.6 kernels right now. So, I needed to grab a patch for the nvidia drivers. Fortunately, one was available. Grab the tar file that is attached to that link and you can get suitable patches for several versions of the nvidia kernel drivers. I was expecting to have to (1) hack an nvidia-drivers ebuild and (2) futz with a /usr/local/portage type solution … but, lo and behold, all I had to do was copy the patch to (I only wanted to patch one specific version of the drivers; I’m using 325.15):

/etc/portage/patches/x11-drivers/nvidia-drivers-325.15/get_num_physpages_325-331.patch

and emerge -avq nvidia-drivers. Poof. Almost easy. I made some use of emerge @module-rebuild and emerge @x11-module-rebuild and had a working X11 very shortly thereafter.
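The copy step, spelled out as a small sketch. install_user_patch is a hypothetical helper name, and the optional third argument exists only so you can point it at a scratch directory for a dry run instead of /etc/portage/patches. Whether Portage picks the patch up depends on the ebuild supporting user patches, which nvidia-drivers evidently did here:

```shell
# Copy PATCH into the user-patch directory for one package version.
# Usage: install_user_patch PATCH CATEGORY/PACKAGE-VERSION [PATCH_ROOT]
install_user_patch() {
    patch_file=$1
    pkg=$2                                  # e.g. x11-drivers/nvidia-drivers-325.15
    root=${3:-/etc/portage/patches}         # the location used in this post
    mkdir -p "$root/$pkg" && cp "$patch_file" "$root/$pkg/"
}

# Typical use (as root), followed by the rebuild:
#   install_user_patch get_num_physpages_325-331.patch x11-drivers/nvidia-drivers-325.15
#   emerge -avq nvidia-drivers
```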

Last but not least ….

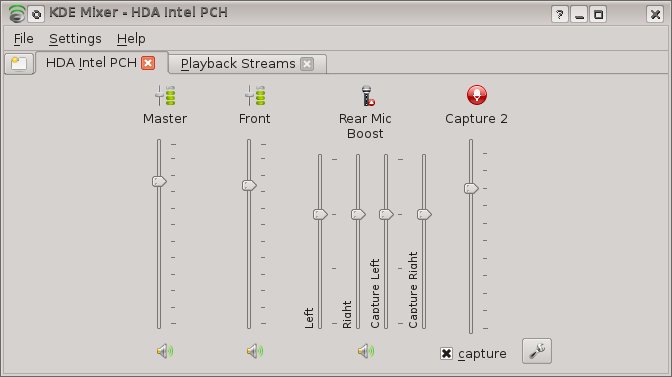

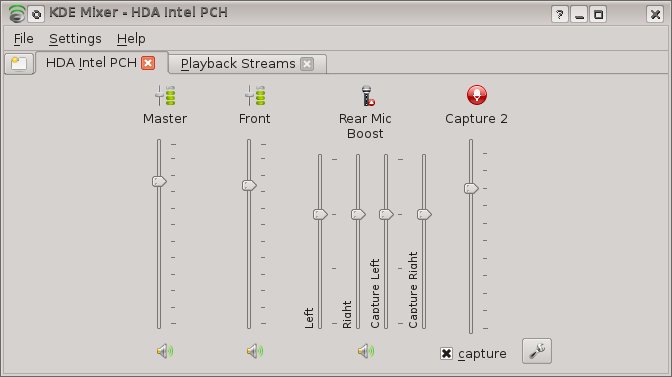

If your memory is good, you might recall that the whole reason I undertook this project was to get my microphone working. Who knows if the kernel upgrade helped, but with some tweaking I got it working tolerably in Skype. This was pure, stubborn knob fiddling. Here are the final fiddles. The first image is from kmix (you can do the same in alsamixer; this was easier for my screen grab). I’m not sure if I mixed-and-matched with Capture 2 before. Also, I tried different combinations of Rear Mic Boost to eliminate a good bit of static in my system, but with other fixes, this flat moderate level seemed to do fine. One oddity: kmix doesn’t show the correct PCM setting (which you can see in alsamixer). Yes, there is a PCM channel you can select under Settings->Configure Channels. But it isn’t the right PCM channel. Odd.

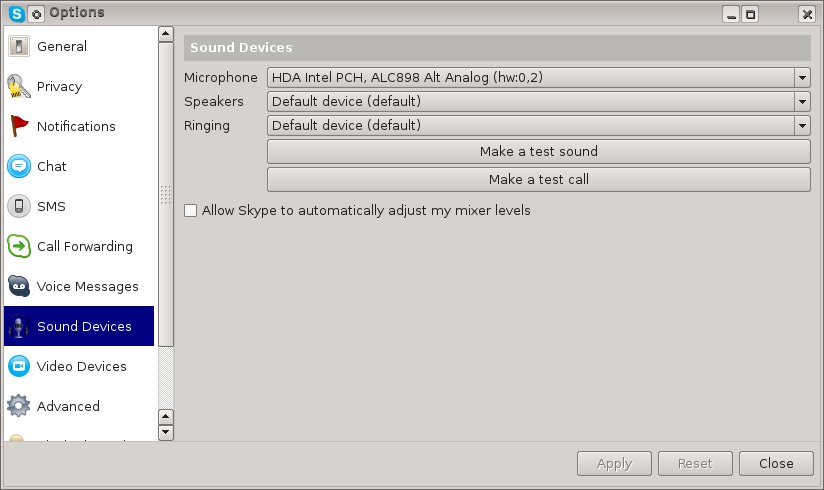

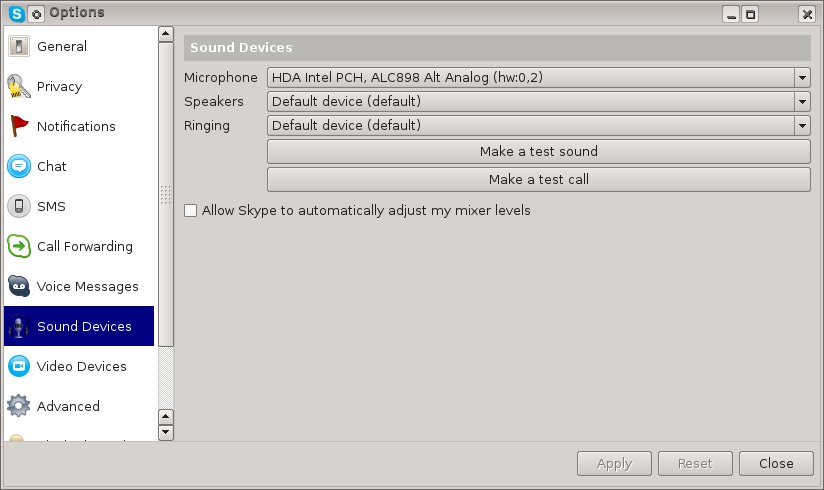

The second image is from Skype. I don’t think I had noticed an Analog option before. What’s more, it had to be the “Alt Analog”. I don’t know whether I had tried it before or whether it only became available due to driver differences in the kernel.

After all that, my Skype Test Call finally worked. Yay. A practical, if not exciting, denouement.
